Interactive Anatomy and Physiology Augmented Reality Application

ABSTRACT

Augmented reality (AR) is a wide-reaching technology that superimposes digitized objects onto the real-world environment. The many applications and studies using AR reveal its increasing use and accuracy in fields including entertainment, research, and surgery. In this study, anatomical and physiological components were implemented in an AR application that allows the viewer to interact with 2D bone images superimposed over their correct placement on the body. The application also allows the viewer to identify designated bones, muscles, and joints in the limbs by selecting them with the right hand. Exploration and game modes let the viewer learn and apply their knowledge in an engaging way. Graduate and undergraduate students tested the prototype for user friendliness, accuracy, and engagement through surveys completed before and after the prototype demonstration. This proof-of-concept prototype reveals the potential effectiveness of AR technologies as cost-effective, engaging educational tools that can be made available to many students. It will form the basis for a second, updated prototype to be implemented in the curriculum of a public high school in Nashville, Tennessee, this coming year.

INTRODUCTION.

Augmented reality (AR) is a trending, wide-ranging technology used in education, research, entertainment, and surgery [1,2,3,4]. Typical AR applications superimpose digital components onto the real-world environment and can be used through handheld devices, cameras, and other technologies. Students from elementary through high school have used AR handheld devices and applications in the classroom, which can increase motivation and build knowledge of both the technology itself and the material in the application [1,3]. AR has also found its way into surgery as an efficient and precise tool for visualizing 3D images of the brain together with the patient’s actual anatomy, helping the surgeon plan the best movements to remove a tumor [4,5]. The precision and sophistication of data collection in these systems have improved over the past few decades, producing new techniques and refinements to each AR system [3,6].

AR has also been used as a “magic mirror” that renders organs in their visual placement on the body as an educational tool [1]. This interactive method has inspired other research and increased the prevalence of such applications in education [1,7]. One study used image processing to create a digital 3D human musculoskeletal system that a viewer could interact with through both augmented and virtual reality (VR) to learn more about human movement [7]. Interactive AR of this kind is especially useful in education because the viewer can engage with superimposed images in relation to their own body in real time [3].

Because interactive AR projects have been shown to increase students’ engagement and conceptual understanding, it is important to explore new subjects, methods, and capabilities for enhancing learning with this technology [1,2]. Anatomy and physiology are important components of education for many middle and high school students, since biomechanics and human biology are significant to many fields of study. However, most current high school classrooms lack technology that could create a more fun and engaging learning environment than traditional forms of instruction [8]. The technologies exist, but a gap remains between them and the classroom, and it is important to pursue opportunities to make these effective, engaging technologies more common in classrooms.

This project sought to create an educational tool in the form of an interactive AR application that applies anatomical and physiological components in both an exploration mode and a game mode. The exploration mode allows the viewer to identify major bones in the limbs, and the game mode allows the viewer to test their newfound knowledge in a quiz game. The major bones, muscles, and joints of the human limbs were incorporated into the application to create the learning environment.

This study is novel in its use of musculoskeletal data to create an application that identifies specific bone, muscle, and joint placement as it relates to the movement of the body, and in the forthcoming implementation of a second version of the application in the curriculum of a local public high school. Using this prototype as the basis for a tool to teach anatomy helps make the technology more accessible and more applicable to classroom education. Graduate and undergraduate students at Vanderbilt University tested the prototype to evaluate its ease of use and teaching value, completing surveys before and after using the AR application. The potential of this technology as an engaging, cost-effective teaching tool is significant as education continues to adapt to changes in the world around it.

METHODS.

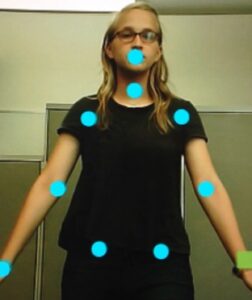

The objective of this study was to create an augmented reality application with anatomy and physiology components using the Orbbec Astra Pro body tracking sensor [9]. The Kinect body tracking device has been used in many previous AR projects [1,7], but the Orbbec Astra Pro provides an improved ability to track 19 joints accurately over a wider range of distances (Figure 1a). For the Orbbec device to function and connect to the computer correctly, the Astra SDK and driver were downloaded from the official Orbbec website [9], along with Visual Studio 2017. A previous kinematics application from the Medical Image Analysis and Statistical Interpretation (MASI) lab served as the template for the program, and an undergraduate converted it from the Kinect device to the Orbbec Astra Pro camera [9]. The kinematics capabilities were then disabled to leave a basic framework using the Orbbec camera, on which an application with new capabilities could be built.

Figure 1. (a) Orbbec body tracking skeleton with 19 accurately tracked joints [9] and (b) ellipses drawn accurately on the joints of the upper body by the Visual Studio program.

The application was written in C# in Microsoft Visual Studio and uses the Orbbec Astra Pro body tracking system. It allows the viewer to select a 2D bone image to identify that bone and to see how it moves in relation to the viewer’s own movements. The placement of major muscles in the limbs can also be identified, although no 2D muscle images are displayed. This interactive technology has a broad range of potential applications, from education to physical therapy, that involve the study of anatomical placement and musculoskeletal movement.

Because the Orbbec camera had not been used in the lab before, ellipses were first drawn at each joint to gauge the precision of the tracking during different movements (Figure 1b). When running the application, there should not be any objects between or very near the Orbbec device and the viewer, as these can confuse the device’s body tracking. Challenges concerning the depth of each 2D ellipse in a 3D environment were solved by placing the ellipse code within the definition of each joint’s parameters, so that the ellipse tracks that joint’s coordinates. This, however, limited the ability to create a function or command involving more than one joint, which became necessary later in the coding process.
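The prototype itself is C# code built on the Astra SDK; the following is only a Python sketch of the per-joint ellipse idea and does not use the SDK. It assumes each tracked joint arrives as a 2D image position plus a depth in millimetres (the `Joint` class is hypothetical), and it adds an assumed refinement: under a pinhole-camera model apparent size falls off as 1/depth, so the ellipse radius is scaled by a reference depth divided by the joint's depth. The default radius and reference depth are illustrative values, not measurements from the study.

```python
from dataclasses import dataclass


@dataclass
class Joint:
    """Hypothetical per-frame joint sample: 2D image position (pixels) and depth (mm)."""
    name: str
    x: float
    y: float
    depth_mm: float


def joint_ellipse(joint: Joint, base_radius_px: float = 20.0,
                  reference_depth_mm: float = 2000.0) -> tuple[float, float, float, float]:
    """Return the (left, top, width, height) bounding box of an ellipse centred on the joint.

    The radius is scaled by reference_depth_mm / depth_mm (pinhole assumption), so the
    marker shrinks as the viewer steps back and grows as they step forward.
    """
    if joint.depth_mm <= 0:
        # A depth of 0 typically means no valid depth reading for this joint.
        raise ValueError("depth must be positive")
    radius = base_radius_px * reference_depth_mm / joint.depth_mm
    return (joint.x - radius, joint.y - radius, 2 * radius, 2 * radius)
```

Recomputing the box from each joint's own coordinates every frame mirrors the approach described above, and it shares the same limitation: the function sees one joint at a time, so anything spanning two joints needs a separate function.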

Two-dimensional bone images were superimposed over their correct placement on the body, as defined by two specific joint positions. At first, the images were defined solely by x and y positions, which broke the illusion when the viewer stood at varying distances from the camera. This depth problem was eventually solved by defining each specific joint through the ‘joint’ class, which gave access to the depth-position x and y coordinates of each designated joint. The bone images then followed the joints to which they were attached, except during certain movements that flipped the images perpendicular to their correct placement on the body. This occurred when the arms were extended above the head; to fix this error, each image was coded to flip horizontally when a certain joint configuration occurred in the viewer’s movement. The images then followed the joints fairly precisely.
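Anchoring a rectangular bone image between two joints comes down to a midpoint, a length, and a rotation angle. The Python sketch below computes those from two joint positions in image coordinates (y grows downward), for example the shoulder and elbow for the humerus. The flip rule, flipping when the distal joint rises above a given head height, is a hypothetical stand-in for the prototype's joint-placement condition, which the text does not specify; `BonePlacement` and `place_bone` are illustrative names, not the prototype's C# code.

```python
import math
from dataclasses import dataclass


@dataclass
class BonePlacement:
    """Where and how to draw one rectangular 2D bone image (pixels and degrees)."""
    center_x: float
    center_y: float
    length: float
    angle_deg: float
    flip_horizontal: bool


def place_bone(proximal: tuple[float, float], distal: tuple[float, float],
               head_y: float) -> BonePlacement:
    """Fit a bone image to the segment between two joints in image coordinates.

    The image is centred on the joints' midpoint, stretched to their distance, and
    rotated to the segment's angle. The flip condition is a hypothetical rule.
    """
    (x1, y1), (x2, y2) = proximal, distal
    return BonePlacement(
        center_x=(x1 + x2) / 2,
        center_y=(y1 + y2) / 2,
        length=math.hypot(x2 - x1, y2 - y1),
        angle_deg=math.degrees(math.atan2(y2 - y1, x2 - x1)),
        flip_horizontal=y2 < head_y,  # smaller y means higher in the image
    )
```

Because the inputs are depth-space positions that already reflect the viewer's distance, the stretched length keeps the image proportionate as the viewer moves toward or away from the camera.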

Because the bone images were defined as rectangles, a rectangle was also attached to the right hand so that the viewer could select an image by hovering the right hand over it. If the right-hand rectangle intersects a specific image, text appears identifying the bone or muscle, depending on which mode the viewer selected when the application opened. This function was implemented in the skeletal exploration mode.
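The hover-to-select behaviour reduces to an axis-aligned rectangle overlap test. This Python sketch assumes rectangles given as left, top, width, and height in pixels, and a hypothetical dictionary mapping each label (such as a bone name) to its image rectangle. It returns the first label whose rectangle the right-hand rectangle overlaps, or `None`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Rect:
    left: float
    top: float
    width: float
    height: float

    def intersects(self, other: "Rect") -> bool:
        """Axis-aligned overlap test; rectangles that only share an edge do not count."""
        return (self.left < other.left + other.width
                and other.left < self.left + self.width
                and self.top < other.top + other.height
                and other.top < self.top + self.height)


def selected_label(hand: Rect, labelled_regions: dict[str, Rect]) -> Optional[str]:
    """Return the label of the first region the right-hand rectangle overlaps, if any."""
    for label, region in labelled_regions.items():
        if hand.intersects(region):
            return label
    return None
```

Using strict inequalities means a hand that merely touches an image's edge does not trigger a label, which avoids flicker at boundaries. If two images overlap, the first one in the dictionary wins.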

The game modes were created as a fun way to test knowledge gained in the exploration mode about the bones of the arms (humerus, radius, and ulna) and legs (femur, tibia, and fibula), as well as joint placement and type, including hinge joints (elbow and knee) and ball-and-socket joints (shoulder and hip). This brings in the physiology components by showing the function of different bones and joints and how they work together to create a movement. The viewer tries to answer each identification question in as few guesses as possible. To create the skeletal game mode, questions were written and ‘if’ statements were used to classify each selection as correct or incorrect, displaying “Correct!” or “Try Again”, respectively. When the viewer selects the correct bone, they move on to the next bone prompt.
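The skeletal game's control flow can be sketched as a small state machine: a list of prompts, a pointer to the current one, and an `if` check on each selection. This is a Python illustration, not the prototype's C# code. It assumes selections arrive as labels such as "left radius" (a hypothetical format), strips the side so that the correct bone on either limb counts, and records how many guesses each prompt took.

```python
from dataclasses import dataclass, field


@dataclass
class BoneQuiz:
    """Minimal quiz state: prompts in order, the current index, and guess counts."""
    prompts: list[str]
    index: int = 0
    guesses: int = 0
    guess_log: list[int] = field(default_factory=list)

    @property
    def finished(self) -> bool:
        return self.index >= len(self.prompts)

    def current_prompt(self) -> str:
        return f"Select the {self.prompts[self.index]}"

    def answer(self, selected_bone: str) -> str:
        """Check a selection such as 'left radius'; the bone on either limb is accepted."""
        if self.finished:
            raise RuntimeError("quiz is already finished")
        self.guesses += 1
        bone = selected_bone.split()[-1].lower()  # drop an optional 'left'/'right' prefix
        if bone == self.prompts[self.index].lower():
            self.guess_log.append(self.guesses)  # guesses needed for this prompt
            self.guesses = 0
            self.index += 1
            return "Correct!"
        return "Try Again"
```

For example, after `BoneQuiz(["humerus", "femur"])` a wrong pick returns "Try Again", a correct pick on either arm returns "Correct!" and advances to the femur prompt, and `guess_log` holds the per-prompt guess counts.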

The joint game mode was similar, but its prompts focused on different types of joints in the limbs, including hinge joints and ball-and-socket joints. Throughout the project, the source code was committed regularly to the Subversion repository on the MASI Augmented Reality Mirror page of the NeuroImaging Tools & Resources Collaboratory (NITRC) [10].
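The joint game differs from the skeletal game mainly in its answer check: the prompt names a joint type, so any joint of that type is correct. This Python sketch assumes a lookup table built from the four joints named above (elbow and knee as hinge joints, shoulder and hip as ball-and-socket joints) and the same hypothetical "side joint" label format; `JOINT_TYPES` and `check_joint_answer` are illustrative names.

```python
# Joint types for the four limb joints covered by the game.
JOINT_TYPES = {
    "elbow": "hinge",
    "knee": "hinge",
    "shoulder": "ball-and-socket",
    "hip": "ball-and-socket",
}


def check_joint_answer(prompted_type: str, selected_joint: str) -> str:
    """Return 'Correct!' if the selected joint (e.g. 'left knee') is of the prompted type."""
    joint = selected_joint.split()[-1].lower()  # drop an optional 'left'/'right' prefix
    if JOINT_TYPES.get(joint) == prompted_type:
        return "Correct!"
    return "Try Again"
```

Keeping the anatomy in a table rather than in nested `if` statements makes it easy to add joints (for example, the wrist or ankle) without touching the checking logic.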

Undergraduate and graduate students at Vanderbilt University tested the application to evaluate the user friendliness and engaging properties of the prototype. Each session lasted an average of 4 minutes, during which the test subject interacted with the AR display using the Orbbec camera. The subject followed prompts in the application, and a facilitator answered questions and provided additional help when necessary. Surveys given before and after the session measured ease of use as well as experience and interest levels in both augmented/virtual reality and the human musculoskeletal system. Each answer was rated on a scale from 1 (lowest) to 5 (highest), with the endpoints labeled according to the question. For example, a question about level of interest was rated from 1 (low interest) to 5 (high interest), while a question about the overall experience of the application was rated from dull to engaging.
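The summary statistics reported for this kind of paired pre/post survey (average rating before and after, and the share of subjects whose rating rose by at least one point) can be computed as follows. This Python sketch assumes one list of 1-5 ratings per survey question, with subjects in the same order in both lists; the function name is hypothetical and no study data are reproduced here.

```python
from statistics import mean


def summarize_change(pre: list[int], post: list[int]) -> dict[str, float]:
    """Summarize paired 1-5 ratings for one question asked before and after the session."""
    if len(pre) != len(post) or not pre:
        raise ValueError("need the same, non-zero number of pre and post ratings")
    for rating in pre + post:
        if not 1 <= rating <= 5:
            raise ValueError(f"rating {rating} is outside the 1-5 scale")
    changes = [after - before for before, after in zip(pre, post)]
    return {
        "mean_pre": mean(pre),
        "mean_post": mean(post),
        "share_up_by_1_or_more": sum(c >= 1 for c in changes) / len(changes),
    }
```

With N = 13, each subject is 1/13 ≈ 7.7% of the sample, so the 69% reported in the Results corresponds to 9 of 13 subjects (9/13 ≈ 0.692).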

RESULTS.

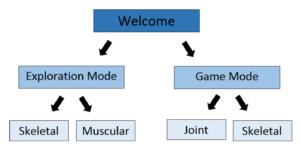

The resulting application has two modes, exploration and game, as shown in the flowchart in Figure 2.

Figure 2. Flowchart of the basic structure and capabilities of the application, which has skeletal and muscular exploration modes as well as joint and skeletal game modes.

The skeletal exploration mode allows the viewer to see 2D bone images over their correct placement on the body and to select an image to identify the designated bone. In the muscular exploration mode, the viewer can select and identify a muscle by hovering the right hand over that spot on the body, but no images are overlaid because muscles are more complex than bones to represent in a two-dimensional environment.
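Without muscle images there is no rectangle to intersect, so muscle selection needs some other notion of a "spot on the body". The text does not say how the prototype defined these spots; one hypothetical approach, sketched here in Python, models each muscle region as the segment between two tracked joints and selects the muscle whose segment lies closest to the right hand, within a pixel tolerance. The region names and the 25-pixel tolerance are illustrative assumptions.

```python
import math
from typing import Optional

Point = tuple[float, float]


def distance_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the line segment a-b (image pixels)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:  # degenerate segment: both joints reported at the same spot
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment's line and clamp the projection to the endpoints.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def hovered_muscle(hand: Point, muscles: dict[str, tuple[Point, Point]],
                   tolerance_px: float = 25.0) -> Optional[str]:
    """Return the muscle region nearest the hand, or None if none is within tolerance."""
    best_name, best_dist = None, tolerance_px
    for name, (a, b) in muscles.items():
        d = distance_to_segment(hand, a, b)
        if d <= best_dist:
            best_name, best_dist = name, d
    return best_name
```

Because the segments are rebuilt from live joint positions each frame, the selectable regions move with the viewer in the same way the bone images do.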

In the game mode, the viewer applies what they learned in the exploration mode to a quiz game. The skeletal game shows the overlaid 2D bones, and a prompt tells the viewer to select a designated bone with their right hand. If the viewer selects the correct bone on either limb, the quiz moves to the next question; otherwise, the viewer can try again until they succeed. The joint game mode is similar, but the viewer is prompted to select a specific type of joint.

Figure 3. (a) The skeletal exploration mode allows the viewer to see the superimposed 2D bone images and identify them by selecting with their right hand. (b) Prompts in the skeletal game mode ask the viewer to identify a specific bone by selecting it with their right hand, giving as many tries as they need to reach the correct answer.

The application was tested for user friendliness and engagement (N=13). Sixty-nine percent of subjects reported that their experience level with augmented reality increased by at least one point (from an average rating of about 2 out of 5 before the test to an average of 3 out of 5 after it). When asked how engaged they were throughout their experience with the AR application, subjects gave an average rating of 4.4 out of 5, where 5 meant very engaging. However, the average ease-of-use rating (following instructions, moving frames, etc.) was lower, at 3.7 out of 5, where 5 meant very easy to use.

DISCUSSION.

Augmented reality is an engaging and effective educational tool because of its hands-on nature and increasing accuracy [3,7]. The prototype application presented in this study helps to address the need for user-friendly, cost-effective teaching tools that can be made easily available to elementary, middle, and high school students in the classroom. Anatomy and physiology are important educational topics that affect many career types and fields of study [11,12]. The anatomy teaching components and game mode were designed to increase the viewer’s engagement and interest and to give a tangible view of how different bones work together to create a motion.

Testing of the prototype by students at Vanderbilt University showed increases in subjects’ experience with and interest in augmented reality technology and the musculoskeletal system. Paired with the high percentage of subjects who learned from the application, these results suggest that the prototype has engaging teaching properties. However, the somewhat lower ease-of-use rating indicates that the flow of the application is somewhat limited, which affects the viewer’s experience.

The survey captured differences before and after experience with the application, but the facilitator sometimes gave significant help with using the application, and the interface had some glitches that hindered ideal use. In the future, quantitative analysis of the application’s accuracy will be important for improving the user interface and overall effectiveness.

As shown by the occasional glitches and the lower ease-of-use rating during testing, several updates should be implemented to increase the effectiveness of this technology. The initial prototype also lacks important features such as 3D musculoskeletal models, which would address some of the challenges of superimposing 2D bone images in a 3D environment. Because the prototype uses strictly 2D images, rotational or complex movements do not always produce ideal bone overlays. Furthermore, a wider range and depth of bone and muscle images would better represent the musculoskeletal system in this educational application.

An updated version of this prototype was further developed by an undergraduate in the MASI lab to address the limitations of the proof of concept application. In the coming school year, the updated prototype will be directly implemented into the curriculum of a public high school in Nashville with lessons in anatomy, kinematics, and computer science.

ACKNOWLEDGEMENTS.

I would like to thank my PI, Dr. Bennett Landman, for helping me through the processes of this research project. I would like to thank Dr. Bruce Damon for his help and guidance on the musculoskeletal aspects of this project, as well as Dr. Brown and Dr. Campbell for advising me through the project. I would also like to thank Dr. Swartz and Anjie Wang for their support, as well as the other lab members who helped answer my many questions.

REFERENCES.

1. M. Meng, P. Fallavollita, T. Blum, U. Eck, C. Sandor, S. Weidert, J. Waschke, N. Navab, Kinect for interactive AR learning. IEEE International Symposium on Mixed and Augmented Reality. 277-278 (2013).

2. A. Petrou, J. Knight, S. Savas, S. Sotiriou, M. Gargalakos, E. Gialouri, Human factors and qualitative pedagogical evaluation of a mobile augmented reality system for science education used by learners with physical disabilities. Personal and Ubiquitous Computing. 13, 243-250 (2009).

3. M. Billinghurst, A. Duenser, Augmented Reality in the classroom. Computer. 45, 56-63 (2012).

4. J. Shuhaiber, Augmented Reality in Surgery. Archives of Surgery. 139, 170-174 (2004).

5. N. Martirosyan, J. Skoch, J. Watson, M. Lemole, M. Romanowski, R. Anton, Integration of Indocyanine Green Videoangiography with operative microscope: Augmented Reality for interactive assessment of vascular structures and blood flow. Operative Neurosurgery. 11, 252-258 (2015).

6. T. Fukuda, T. Zhang, N. Yabuki, Improvement of registration accuracy of a handheld augmented reality system for urban landscape simulation. Frontiers of Architectural Research. 3, 386-397 (2014).

7. A. Voinea, F. Moldoveanu, A. Moldoveanu, 3D model generation of human musculoskeletal system based on image processing: An intermediary step while developing a learning solution using Virtual and Augmented Reality. International Conference on Control Systems and Computer Science. 315-320 (2017).

8. K. Hew, T. Brush, Integrating technology into K-12 teaching and learning: current knowledge gaps and recommendations for future research. Educational Technology Research and Development. 55, 223-252 (2007).

9. Develop with Orbbec (2018). (available at https://orbbec3d.com/develop/)

10. Augmented Reality mirror (2018). (available at https://www.nitrc.org/projects/ar_mirror)

11. Reasons to study anatomy & physiology (2018). (available at https://www.thecompleteuniversityguide.co.uk/courses/anatomy-physiology/reasons-to-study-anatomy-physiology/)

12. M. Jensen, A. Mattheis, A. Loyle, Offering an anatomy and physiology course through a high school-university partnership: the Minnesota model. Advances in Physiology Education. 37, 157-164 (2013).

Posted by John Lee on Tuesday, December 22, 2020 in May 2019.

Tags: Augmented reality, education, musculoskeletal, prototype