A Custom Object Detection and Text-To-Speech System for Enhanced User Interaction

ABSTRACT

The growing demand for assistive technologies has led to innovations aimed at improving accessibility for individuals with visual impairments. One such innovation is the integration of object detection and text-to-speech (TTS) technology, which this research explores for real-time scene recognition. The study aims to enhance accessibility by allowing a system to detect objects through a camera and verbally announce them, assisting individuals with visual impairments or in hands-free environments. Using the YOLOv8 model, trained on the COCO dataset, the system accurately identifies objects such as people, furniture, and everyday items. The detected objects are then stored in a dictionary and communicated audibly, with quantities (e.g., “one person, two chairs”) to ensure clarity. The experimental plan involved processing live video feeds and outputting spoken object detections with a 4-second pause between updates to maintain a clear, non-overlapping speech flow. Initial results show that the system can reliably identify and announce objects in real time, though challenges with processing speed and accuracy under certain conditions suggest further refinement is necessary. The findings contribute to the development of assistive technologies, and future work should focus on optimizing performance for broader applications.

INTRODUCTION.

In recent years, object detection has emerged as a vital field within artificial intelligence (AI), revolutionizing industries such as surveillance, healthcare, autonomous driving, and assistive technologies. The ability of AI systems to recognize and classify objects in real time has broad implications, not only for technological advancement but also for enhancing human capabilities, particularly in tools for people with visual impairments [1]. Object detection models have seen rapid progress through various architectures such as convolutional neural networks (CNNs), advanced methods like You Only Look Once (YOLO), and Faster Region-based Convolutional Neural Networks (Faster R-CNN), achieving remarkable accuracy in real-world applications [2]. However, there remains a gap in bridging object detection technology with user-friendly interfaces, especially in terms of integrating speech feedback for broader accessibility.

A significant challenge in this field is improving how AI communicates detected objects to users in an intuitive and non-intrusive way. For people with visual impairments, real-time object recognition systems coupled with auditory feedback can be transformative, creating safer navigation and improving quality of life [2]. Previous studies have explored object detection systems using text-to-speech (TTS) technology, but many of these solutions are limited by repetitive feedback loops, overwhelming users with redundant information [3]. For example, if a system detects multiple people, current models may repeatedly announce “person, person, person…” rather than providing a concise summary of the detected objects and their quantities.

This research aims to address this issue by developing an object detection model that incorporates TTS to efficiently relay the number and types of objects detected without redundancy. By focusing on enhancing the human-AI interaction through structured and meaningful speech outputs, this study proposes a solution that condenses object recognition results (e.g., “1 person, 2 chairs”) and introduces pauses between announcements for better understanding. Optimizing the auditory feedback loop through a combination of TTS enhancements and training a model on diverse objects can significantly improve the accessibility of AI for users with visual impairments. This paper builds on existing work in the fields of AI object detection, accessibility, and human-computer interaction while addressing the critical gap of user-friendly auditory feedback systems for assistive technologies. Previous studies have emphasized the importance of real-time detection and feedback systems, but there is limited exploration into efficient speech outputs that reduce redundancy and improve the user experience [4]. The results of this study could have significant implications for both AI research and the broader accessibility technology landscape.

MATERIALS AND METHODS.

Dataset and Data Processing.

For this study, the COCO dataset was used because of its large-scale object detection annotations, which include images containing multiple objects labeled by category, bounding box, and segmentation information. The 118,287 training images were sourced from the COCO 2017 training set, and the 5,000 validation images were sourced from the corresponding validation set. The annotation files, which list object instances and their categories, were stored in JSON format and retrieved from the official COCO dataset repository [5].

Prior to training, the dataset underwent a preprocessing stage that involved resizing the images to 640×640 pixels to match the input sizes expected by the YOLOv8 model architecture. In addition, each image was normalized based on the mean and standard deviation of the dataset, which helps in model convergence during training. Random horizontal flips, color jittering, and other data augmentation techniques were applied to the training set to increase variability and prevent overfitting. The bounding box coordinates were also scaled according to the image size, ensuring accurate annotations.
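The bounding box scaling and horizontal flip described above can be sketched as follows. This is a minimal illustration, not the pipeline used in the study: it assumes a plain stretch to 640×640 (no letterbox padding) and COCO-style boxes given as (x_min, y_min, width, height). The function names `scale_box` and `hflip_box` are hypothetical.

```python
from typing import Tuple

# COCO annotation format: (x_min, y_min, width, height), in pixels.
Box = Tuple[float, float, float, float]


def scale_box(box: Box,
              orig_size: Tuple[int, int],
              new_size: Tuple[int, int] = (640, 640)) -> Box:
    """Rescale a box when an image is resized from orig_size to new_size.

    Sizes are (width, height). Assumes a plain stretch, not a letterbox resize.
    """
    x, y, w, h = box
    sx = new_size[0] / orig_size[0]  # horizontal scale factor
    sy = new_size[1] / orig_size[1]  # vertical scale factor
    return (x * sx, y * sy, w * sx, h * sy)


def hflip_box(box: Box, image_width: float) -> Box:
    """Mirror a box horizontally, matching a horizontal flip of the image."""
    x, y, w, h = box
    return (image_width - x - w, y, w, h)
```

For example, a box in a 1280×640 image is halved horizontally and left unchanged vertically when the image is resized to 640×640. Applying the flip after the resize keeps the annotation aligned with the augmented image.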

AI Model.

This project used YOLOv8, a state-of-the-art deep-learning model designed for real-time object detection [6]. YOLOv8 builds on previous iterations by increasing detection speed and accuracy, making it a good choice for applications involving multiple objects in different environments. The model was fine-tuned on the COCO dataset using a learning rate of 0.001, a batch size of 16, and a momentum of 0.937. The model was trained for 100 epochs on an NVIDIA GTX 1660 GPU for accelerated computation.
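A training run with these hyperparameters might be configured as in the sketch below, which uses the Ultralytics Python API. The hyperparameter values come from the text. The checkpoint name `yolov8n.pt` and the dataset file `coco.yaml` are assumptions, since the paper does not state which model size or configuration file was used.

```python
# Hyperparameters reported in the paper; "data" is an assumed config file name.
TRAIN_ARGS = {
    "data": "coco.yaml",   # assumption: dataset YAML describing COCO 2017
    "epochs": 100,         # training length reported in the text
    "batch": 16,           # batch size reported in the text
    "imgsz": 640,          # input resolution used during preprocessing
    "lr0": 0.001,          # initial learning rate
    "momentum": 0.937,     # SGD momentum
    "optimizer": "SGD",    # optimizer named in the paper
}


def train(weights: str = "yolov8n.pt"):
    """Fine-tune a YOLOv8 checkpoint (the default weights name is an assumption).

    Requires the `ultralytics` package; it is imported lazily so the
    configuration above can be inspected without installing it.
    """
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(**TRAIN_ARGS)
```

Keeping the settings in one dictionary makes it easy to log them next to the results and to vary one value at a time in later experiments.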

In addition to the default YOLOv8 architecture, the confidence threshold was adjusted to reduce false positives. The final model was trained using the stochastic gradient descent (SGD) optimizer for better convergence. The loss function combined classification, localization, and objectness losses, while Intersection over Union (IoU) was used as the primary metric for bounding box accuracy.

\[IoU=\frac{Area\ of\ Overlap}{Area\ of\ Union}\tag{1}\]
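As an illustration of Eq. (1), the sketch below computes IoU for two axis-aligned boxes. It assumes boxes in (x_min, y_min, x_max, y_max) corner format, a choice made for this example rather than a convention stated in the paper.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes in (x_min, y_min, x_max, y_max) format."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping rectangle (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    overlap = inter_w * inter_h

    # Area of Union = sum of both areas minus the overlap counted twice.
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - overlap
    return overlap / union if union > 0 else 0.0
```

Two 2×2 boxes offset by one unit in each direction share an overlap of 1 and a union of 7, giving an IoU of 1/7. Identical boxes give 1, and disjoint boxes give 0.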

Text-to-Speech Integration.

To enable auditory feedback for detected objects, pyttsx3, a Python TTS library, was integrated into the system [7]. Once the model detected objects in an image, their labels and counts were passed to pyttsx3 to convert the information into spoken output. The system counts similar objects (e.g., “1 person, 2 chairs”) to prevent repetitive feedback. A 4-second pause was programmed between announcements to improve clarity and prevent overlapping speech. The TTS system’s volume and speech rate were tuned to suit different environmental conditions, ensuring that users, particularly those with visual impairments, could clearly understand the object detection results.
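The counting and pacing logic described above could look roughly like the following sketch. The helper names (`pluralize`, `summarize`, `announce`), the small plural table, and the speech rate of 150 are assumptions made for illustration. Only the pyttsx3 calls (`init`, `setProperty`, `say`, `runAndWait`) and the 4-second pause come from the library and the text.

```python
import time
from collections import Counter

# Illustrative table of irregular plurals; extend as needed.
IRREGULAR_PLURALS = {"person": "people"}


def pluralize(label: str, count: int) -> str:
    """Return the label in singular or plural form for the given count."""
    if count == 1:
        return label
    if label in IRREGULAR_PLURALS:
        return IRREGULAR_PLURALS[label]
    if label.endswith(("s", "x", "ch", "sh")):
        return label + "es"
    return label + "s"


def summarize(labels) -> str:
    """Collapse repeated labels into one phrase such as '1 person, 2 chairs'."""
    counts = Counter(labels)  # keeps first-seen order of labels
    return ", ".join(f"{n} {pluralize(label, n)}" for label, n in counts.items())


def announce(label_batches, pause_seconds: float = 4.0) -> None:
    """Speak one summary per batch of detections, pausing between announcements.

    Requires the `pyttsx3` package, imported lazily so `summarize` can be
    used and tested without an audio backend.
    """
    import pyttsx3

    engine = pyttsx3.init()
    engine.setProperty("rate", 150)    # assumed speech rate (words per minute)
    engine.setProperty("volume", 1.0)  # maximum volume
    for labels in label_batches:
        text = summarize(labels)
        if text:
            engine.say(text)
            engine.runAndWait()        # block until speech finishes
        time.sleep(pause_seconds)      # gap between announcements
```

With this helper, `summarize(["person", "chair", "chair"])` yields the phrase "1 person, 2 chairs" instead of three separate announcements.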

Evaluation Metrics.

The model’s performance was assessed using mean average precision (mAP) at different IoU thresholds, which evaluates the model’s precision in detecting objects. Additional metrics such as precision, recall, and F1-score were calculated to further assess the trade-off between true and false positives. The text-to-speech performance was qualitatively evaluated through user feedback while also focusing on the clarity and efficiency of the speech output during real-world object detection tests.

\[mAP = \frac{1}{N} \sum_{c=1}^{N} AP_c \tag{2}\]
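The precision, recall, and F1-score named alongside mAP follow directly from counts of true positives, false positives, and false negatives. The sketch below shows the standard definitions. The function name is hypothetical, and how detections are labeled as true or false positives (for example, by an IoU threshold) is left outside its scope.

```python
def precision_recall_f1(tp: int, fp: int, fn: int):
    """Compute precision, recall, and F1 from detection counts.

    tp: detections matched to a ground-truth object
    fp: detections with no matching ground-truth object
    fn: ground-truth objects the model missed
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return precision, recall, f1
```

For instance, 8 true positives with 2 false positives and 2 missed objects give a precision, recall, and F1 of 0.8 each.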

RESULTS.

The performance of the YOLOv8 model was evaluated based on various metrics, including mAP and IoU values. The dataset used for testing consisted of 5,000 images with 36,781 ground truth annotations.

In Eq. (1), the Area of Overlap is the intersection area between the predicted bounding box and the ground truth box, and the Area of Union is the total area covered by both boxes (see Fig. S1).

The average IoU, computed over all detected instances, was approximately 0.7204. This metric is critical because it reflects the model’s accuracy in predicting the location of objects.
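One way to arrive at an average IoU over detected instances is sketched below. The paper does not describe how predictions were paired with ground truth boxes, so the matching rule here (each prediction is scored against the best-overlapping ground truth box of the same class) is an assumption, as are the function names and the (x_min, y_min, x_max, y_max) box format.

```python
def iou(box_a, box_b):
    """IoU of two boxes in (x_min, y_min, x_max, y_max) format."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    overlap = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - overlap
    return overlap / union if union > 0 else 0.0


def mean_iou(predictions, ground_truths):
    """Average the best same-class IoU over all predictions.

    Both arguments are lists of (label, box) pairs. Predictions whose class
    has no ground-truth box in the image are skipped (an assumed rule).
    """
    scores = []
    for label, box in predictions:
        candidates = [iou(box, gt_box)
                      for gt_label, gt_box in ground_truths
                      if gt_label == label]
        if candidates:
            scores.append(max(candidates))
    return sum(scores) / len(scores) if scores else 0.0
```

Under a different matching rule, such as one-to-one assignment or counting unmatched predictions as zero, the reported average would change, so the rule should be stated alongside the number.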

The mAP was also computed, specifically at an IoU threshold of 0.7.

In Eq. (2), N is the number of classes, and AP_c is the Average Precision for class c, calculated as:

\[AP_c=\sum_r Precision(r)\,\Delta r\tag{3}\]

where r represents the recall levels. To provide a concrete example, the model achieved a mAP of 0.754 across all classes at an IoU threshold of 0.7. This means that, averaged over all categories, the average precision was 0.754 when a detection counted as correct only if it overlapped the ground truth with an IoU of at least 0.7. For reference, state-of-the-art models like YOLOv8 on the full COCO dataset typically achieve mAP values between 0.5 and 0.6, although those figures are usually averaged over IoU thresholds from 0.5 to 0.95 and are therefore not directly comparable with a single-threshold value. With that caveat, a mAP of 0.754 indicates strong performance.
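Equations (2) and (3) can be illustrated with the short sketch below. It computes an uninterpolated AP by summing precision times the change in recall over detections ranked by confidence, then averages AP over classes. This is a simplified form: the official COCO evaluation uses interpolated precision sampled at fixed recall levels, so its values will differ. The function names and input layout are assumptions.

```python
def average_precision(detections, num_ground_truth: int) -> float:
    """AP_c = sum over r of Precision(r) * delta r, for one class.

    detections: list of (confidence, is_true_positive) pairs, where a
    detection is a true positive if it matches a ground-truth box at the
    chosen IoU threshold.
    """
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    ap = 0.0
    prev_recall = 0.0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        precision = tp / (tp + fp)
        recall = tp / num_ground_truth
        ap += precision * (recall - prev_recall)  # Precision(r) * delta r
        prev_recall = recall
    return ap


def mean_average_precision(ap_per_class: dict) -> float:
    """mAP = (1/N) * sum of AP_c over the N classes."""
    values = list(ap_per_class.values())
    return sum(values) / len(values) if values else 0.0
```

A false positive lowers the precision of every later true positive, so a confident mistake near the top of the ranking costs more AP than one near the bottom.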

Model Predictions and Ground Truth Comparisons.

IoU values range from 0 to 1, with higher values indicating better overlap between the predicted and ground truth bounding boxes. In most object detection benchmarks, an IoU above 0.5 is considered acceptable, and values above 0.75 are considered strong.

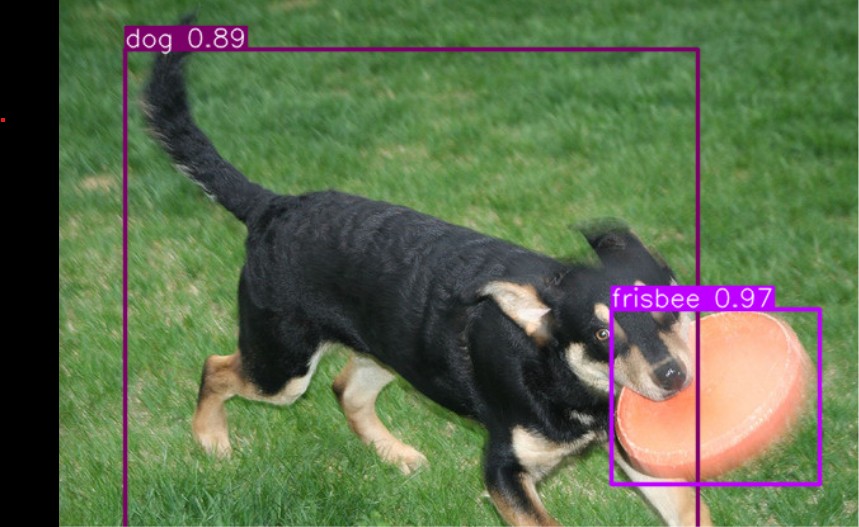

To evaluate the performance of the YOLOv8 model, several test images from the validation set were analyzed. One representative example is Image ID 221693, which contains a dog and a frisbee. Figures 1 and 2 show the same image with different annotations: Figure 1 displays the predicted bounding boxes generated by the model, while Figure 2 shows the ground truth bounding boxes from the dataset annotations.

The model identified and closely localized both the dog and the frisbee, as shown in Figure 1. The YOLOv8 model achieved an Intersection over Union (IoU) score of 0.7578 for the dog in Image ID 221693, indicating accurate localization. Additionally, the IoU score for the frisbee in the same image was 0.9011, reflecting higher localization accuracy for this object. The corresponding model confidence scores for these predictions were 0.89 for the dog and 0.97 for the frisbee, indicating high certainty in the classification, which aligned with the strong localization shown by the IoU scores. These IoU scores were calculated by comparing the predicted bounding boxes in Figure 1 with the ground truth annotations shown in Figure 2. These values demonstrate that the model performs well in identifying both large and smaller objects within complex scenes. The comparison between the two figures highlights the model’s strong alignment with the actual object locations, despite small differences in the exact bounding box coordinates.

To further assess the model’s performance, additional test cases were analyzed and are presented in the Supporting Information (Figures S2–S3). These examples include a more complex scene (Image ID 16228) containing multiple people, a streetcar, a horse, a bench, and other objects. As shown in Figure S2 (ground truth) and Figure S3 (model predictions), the model accurately identifies most objects, though some bounding boxes are slightly misaligned, especially around object edges and overlaps. The average IoU across all objects in this scene was 0.783, indicating precise localization despite overlapping objects and scene complexity. The mean Average Precision (mAP) across these categories was 0.633, supporting the conclusion that the YOLOv8 model performs effectively even in dense and varied environments.

DISCUSSION.

The results from evaluating the YOLOv8 model reveal significant insights into its performance in object detection tasks. The model showed an excellent ability to identify and localize objects, as evidenced by the relatively high Intersection over Union (IoU) score of 0.7578 for the dog in Image ID 221693. This result aligns with the hypothesis that the YOLOv8 model would effectively detect and localize objects within images, showcasing its capability to produce reliable predictions in less complex scenarios.

The IoU score for the frisbee was even higher at 0.9011, which indicates strong localization accuracy. This demonstrates that the model can effectively detect and localize smaller objects when they are clearly visible and not heavily occluded. This finding challenges the common assumption that models consistently struggle with smaller items, suggesting that performance can vary significantly depending on an object’s visibility, background complexity, and contrast with its surroundings.

In addition to object detection performance, the text-to-speech (TTS) system was tested to assess its ability to announce detected objects and their quantities. For a complex scene such as Image ID 16228 (Figures S2–S3), the system successfully identified and verbally announced each object, including people, the horse, a bench, and the streetcar. It correctly reported the number of each object type (e.g., “three people, one horse, one bench”), confirming that the TTS integration was capable of handling multi-object environments without repetition or omission. This demonstrates the system’s potential for real-time accessibility applications, particularly in visually dense or dynamic settings.

Overall, while the model shows high precision in detecting the key objects, small shifts in box placement–especially around the edges–indicate that further refinement could improve the model’s performance on complex scenes. Continued evaluation and adjustment of the model’s parameters may enhance its ability to accurately localize objects in various environments.

The significance of these results lies in their implications for the practical application of the YOLOv8 model in real-world scenarios. For tasks requiring precise localization of objects of various sizes, this model shows promise, but the variation in performance with object characteristics shows that further optimization is necessary. Specifically, although the frisbee was localized well in this example, small objects that are occluded or low in contrast are likely to be harder to detect, so more training data focused on these types of instances, or the implementation of additional data augmentation techniques, could enhance overall accuracy.

Even with encouraging results, this study has limitations. While the COCO validation set includes 5,000 images and covers 80 object categories across a wide variety of indoor and outdoor scenes, this analysis focused on a small number of selected examples. For a more statistically representative evaluation, the model should ideally be tested on several hundred randomly sampled images to account for the full range of object types, lighting conditions, and spatial arrangements. Although the COCO dataset is relatively diverse (including people, animals, vehicles, food, and household items), it does not fully capture certain real-world challenges, such as low-light environments, motion blur, rare object categories, or culturally specific scenes. These gaps can affect how well the model generalizes to unfamiliar settings, and future studies should incorporate additional datasets or targeted test cases to evaluate robustness more comprehensively. The program’s ability to accurately identify and announce a variety of objects, such as people, furniture, and food, indicates its potential to increase accessibility for visually impaired users. This is consistent with previous studies highlighting the use of AI-driven object detection combined with TTS technologies in assisting individuals with visual impairments [8]. While the model performs well in ideal conditions, its accuracy was noticeably lower for objects that were underrepresented in the training dataset, such as a pen. Limited exposure to these categories during training made them more difficult for the model to detect reliably, especially under varying lighting conditions. These challenges require further refinement, as indicated by previous work on object detection, which emphasizes the importance of robust models in diverse environments [8].

Additionally, the 4-second pause between announcements is beneficial for speech clarity. Still, it might not be suitable for all scenarios, particularly in fast-paced environments where real-time information is crucial. These limitations point to directions for future research. Making the model adaptable to different lighting and environmental conditions could improve its robustness, and exploring more advanced speech technologies could further enhance the user’s experience by providing more natural-sounding and contextually aware verbal feedback. Other potential enhancements include adding segmentation masks, leveraging larger datasets, or using more complex models. These would improve detection capabilities in challenging settings, which could ultimately help create effective assistive devices.

CONCLUSION

This project aimed to create a custom object detection system that uses text-to-speech technology to improve real-time object detection and to communicate the number of objects detected. The results demonstrated that the YOLOv8 model effectively identified a variety of objects, which can increase accessibility for visually impaired users. The integration of object counting and optimized speech pacing contributes to a more intuitive user experience, aligning with existing literature that emphasizes the importance of such systems in accessibility applications [9].

Furthermore, the implementation of the TTS technology allows for a seamless interaction between the user and the system. By converting the detected objects into audible feedback, the system gives users immediate information about their environment without requiring them to rely solely on visual cues. This is particularly beneficial in complex or dynamic settings where navigating visually can be challenging. The ability to communicate not just the presence of objects but also their quantities enhances the impact of the information being conveyed, creating a more engaging and informative experience.

The findings of this project also highlight the potential for expanding the system’s capabilities. Additional features, such as contextual information about the detected objects or personalized settings based on user feedback, could further tailor the experience to individual needs. Such improvements would contribute to a more versatile solution, making it easier for visually impaired users to interact with their surroundings.

Moreover, this research aligns with broader efforts to promote inclusivity through technology. By developing systems that prioritize accessibility, the way is paved for advancements that can transform how individuals with disabilities interact with the world around them. This project contributes to a growing body of work that seeks to leverage machine learning and artificial intelligence to create smarter, more responsive environments that help meet diverse user needs.

Ultimately, this project not only demonstrates the effectiveness of the YOLOv8 model in object detection but also underscores the significance of using assistive technologies to improve accessibility. The results provide a strong foundation for future research and development in this area, encouraging continued exploration of innovative solutions that enhance the quality of life for visually impaired individuals and promote greater inclusivity in technology.

ACKNOWLEDGMENTS.

I would like to thank my mentor, Rahul Sarkar, for his invaluable guidance and support throughout this project. His help with troubleshooting bugs, setting up my system, and providing insights into the development process was crucial in bringing this project to completion.

SUPPORTING INFORMATION.

Supporting Information includes Figures S1–S3: a diagram of how the IoU is calculated for the dog image above (Figure S1) and a further comparison between the ground truth bounding boxes from the annotation file (Figure S2) and the bounding boxes predicted by the model (Figure S3).

REFERENCES

1. A. Roy, J. Mukherjee, Object detection and text-to-speech for visually impaired using deep learning. Int. J. Innov. Res. Comput. Commun. Eng. 7, 1874–1880 (2019).

2. J. Redmon, A. Farhadi, YOLOv3: An incremental improvement. arXiv (2018). https://arxiv.org/abs/1804.02767

3. R. Joshi, M. Tripathi, A. Kumar, M. S. Gaur, Object recognition and classification system for visually impaired. In: 2020 International Conference on Communication and Signal Processing (ICCSP), IEEE, pp. 1568–1572 (2020).

4. M. Parker, A. Wahab, Efficient object detection systems for accessibility: A comprehensive review. J. Hum.-Comput. Interact. 36, 112–126 (2020).

5. COCO Dataset: Common Objects in Context. https://cocodataset.org/ (Accessed September 2024).

6. YOLOv8 Documentation, Ultralytics. https://docs.ultralytics.com/ (Accessed September 2024).

7. pyttsx3 Documentation: Text-to-Speech for Python. https://pyttsx3.readthedocs.io/ (Accessed October 2024).

8. S. Ren, K. He, R. Girshick, J. Sun, Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 28 (2015).

9. R. Pradeep, M. Gupta, A. Agarwal, Assistive object detection and recognition for the visually impaired using AI and TTS. J. Assist. Technol. 15, 89–98 (2021).

Posted by buchanle on Wednesday, June 25, 2025 in May 2025.

Tags: Accessibility, Object Detection, Real-Time System, Text-to-Speech, Visually Impaired